From Audio to Text: AI, data privacy and asynchronous processing

As digital systems become more embedded in our lives, protecting personal data is no longer optional.

That’s why we’re building ethical, sustainable digital services that protect your data and stand the test of time.

Recently, we took on an exciting challenge: designing an AI solution that safeguards user privacy and technological sovereignty.

The project

Rethinking audio-to-text transcription with a privacy-first approach.

Instead of transferring sensitive audio files to external servers, we offer a decentralized architecture that enables each organization to deploy the tool directly on its existing infrastructure.

This approach addresses two strategic objectives:

Ensuring maximum data privacy:

whether due to regulatory requirements, data sensitivity or an organization's own strategy, recordings must not be accessible to others or end up on third-party platforms. Keeping them in-house also gives the organization greater autonomy.

Promoting ecodesign practices:

by reusing existing hardware, we reduce resource consumption, avoid planned obsolescence and minimize environmental impact.

The principles of our solution

Every member of the organization can easily convert an audio file into written text.

The software should allow multiple users to access the service simultaneously, without limitations or constraints.

Instant transcription is not strictly necessary; users can submit their files and retrieve the transcriptions later at their convenience.

The solution must integrate seamlessly with the company’s existing infrastructure (its current server) and generate no additional costs after the initial development phase.

The software

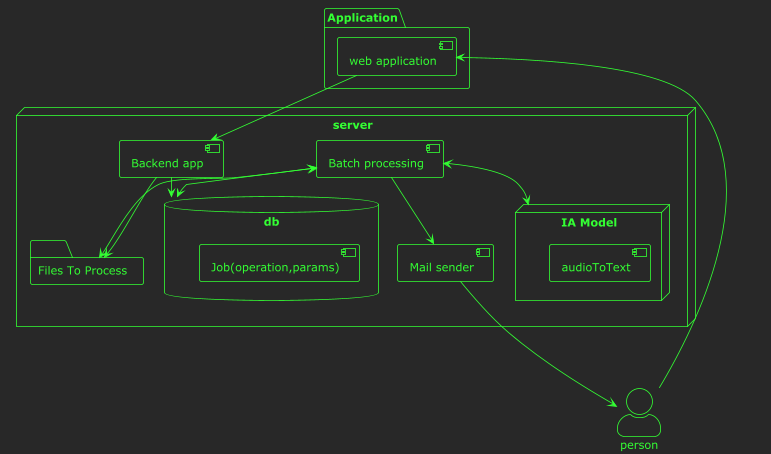

These requirements have been translated into the following implementation and design:

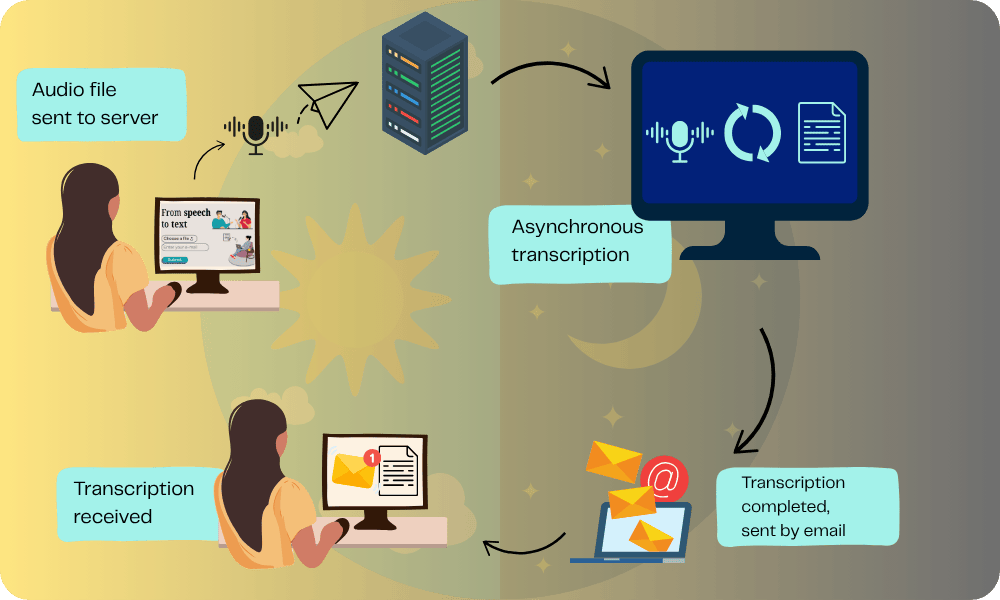

- Each employee logs into the application's website and uploads their audio file, along with the email address to which the transcription should be sent.

- The file is saved on the server.

- At night, when demand on the company's server is lower, a batch process is triggered. It generates a transcription for each file uploaded during the day, using an AI module optimized for transcription.

- Once the transcription is complete, the server emails the result to the user.
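A minimal sketch of the nightly step, under a few assumptions: the `Job` record, the function names and the sender address are hypothetical, and the transcription model and the mail transport are passed in as plain functions because the article names neither. Files are processed one at a time, so a modest server only runs one inference at once.

```python
from dataclasses import dataclass
from email.message import EmailMessage
from pathlib import Path
from typing import Callable, Iterable


@dataclass
class Job:
    """One uploaded file waiting for the nightly batch (hypothetical structure)."""
    audio_path: Path
    email: str


def build_result_email(job: Job, transcript: str, sender: str) -> EmailMessage:
    """Build the message that returns the transcription to the user."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = job.email
    msg["Subject"] = f"Transcription of {job.audio_path.name}"
    msg.set_content(transcript)
    return msg


def run_nightly_batch(
    jobs: Iterable[Job],
    transcribe: Callable[[Path], str],
    send: Callable[[EmailMessage], None],
    sender: str = "transcription@example.org",  # placeholder address
) -> int:
    """Transcribe each queued file sequentially, email the result, return the count."""
    done = 0
    for job in jobs:
        transcript = transcribe(job.audio_path)  # the chosen AI model goes here
        send(build_result_email(job, transcript, sender))
        done += 1
    return done
```

In a real deployment, `send` could wrap the standard library's `smtplib.SMTP(...).send_message`, `transcribe` would call whichever model was selected, and a scheduler such as cron would start the batch at night.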

Application component diagram:

Methodology

For the development of this application, we employed an agile methodology and integrated the theoretical framework of the GREENSOFT model.

A Sustainability Journal was created to document each decision and its implications for the product’s sustainability.

This approach allows us to:

- integrate sustainability concerns and requirements into the development process

- keep a record of the changes made and their eco-design impacts

Eco-design

The criteria

We cannot overlook the environmental impacts of AI models.

That’s why, in developing our solution, we have identified two main eco-design objectives:

- Minimize the environmental impact of using an AI model

- Use open AI models that can easily be replaced by lighter, more efficient versions as these become available

How do we achieve our sustainability goals?

Prioritize efficiency over immediate response

File submission is decoupled from file processing.

This means:

- Less powerful servers can be used or reused. Transcription takes longer, but no new servers need to be provisioned and no additional costs are incurred.

- Limiting material resource consumption. Most software runs during peak energy-demand periods; we chose to run our service at night, making better use of the server without compromising service quality.

- Because upload and processing are decoupled, the submission service can be shut down entirely at night, freeing server resources outside business hours.
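The scheduling rule described above can be expressed as two non-overlapping time windows. This is a hypothetical sketch: the boundaries (08:00 to 19:00 for uploads, 22:00 to 06:00 for the batch) are placeholders, not the project's actual schedule.

```python
from datetime import time


def in_window(now: time, start: time, end: time) -> bool:
    """True if `now` lies in [start, end), including windows that cross midnight."""
    if start <= end:
        return start <= now < end
    # Window wraps past midnight, e.g. 22:00 -> 06:00.
    return now >= start or now < end


# Placeholder schedule: uploads during business hours, transcription at night.
BUSINESS_HOURS = (time(8, 0), time(19, 0))
NIGHT_BATCH = (time(22, 0), time(6, 0))


def submission_open(now: time) -> bool:
    """Whether the upload service should be running at `now`."""
    return in_window(now, *BUSINESS_HOURS)


def batch_allowed(now: time) -> bool:
    """Whether the transcription batch may run at `now`."""
    return in_window(now, *NIGHT_BATCH)
```

Because the two windows never overlap, the upload service and the transcription batch never compete for the same server resources. The only subtlety is the night window, which crosses midnight and therefore needs the `start > end` branch.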

AI model selection

- With the goal of autonomy and independence, we have chosen open AI models that can be reused.

- We selected pre-trained models specialized in specific languages, enabling better transcription even with smaller-sized models.

- After testing, we identified the lightest model capable of producing acceptable transcriptions. Using more powerful models than necessary is a major contributor to environmental impact.
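The article does not say which quality measure was used to decide what counts as an "acceptable" transcription. Assuming word error rate (WER) measured against a small set of reference transcripts, the selection rule could look like this; the model names, sizes and threshold are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference words,
    computed with a word-level Levenshtein distance."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distance from empty ref prefix to hyp[:j]
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution, or match at no cost
            )
        prev = curr
    return prev[len(hyp)] / max(len(ref), 1)


def lightest_acceptable(
    candidates: Sequence[Tuple[str, int, float]], max_wer: float
) -> Optional[str]:
    """Return the smallest model whose measured WER is within the threshold.

    Each candidate is a (name, parameter_count, measured_wer) tuple.
    """
    acceptable = [c for c in candidates if c[2] <= max_wer]
    if not acceptable:
        return None
    return min(acceptable, key=lambda c: c[1])[0]
```

The rule filters by quality first and only then minimizes size, so a larger model is chosen only when no smaller one meets the threshold.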

What remains to be done?

Functionalities perspective:

- Extend the use of AI models: beyond transcription, we are considering applications such as text translation or summarizing large documents.

- By designing the AI model as an independent module, we can adapt it to any task requiring asynchronous processing.
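One way to keep the AI model an independent module is to make the batch depend only on a small interface. The sketch below uses `typing.Protocol`; the `AsyncTask` interface, the task names and the placeholder classes are hypothetical, and real implementations would call the actual models.

```python
from pathlib import Path
from typing import Dict, Protocol


class AsyncTask(Protocol):
    """Anything the nightly batch can run on a submitted file (hypothetical)."""

    def run(self, path: Path) -> str: ...


class Transcriber:
    def run(self, path: Path) -> str:
        # Placeholder: a real version would call the speech-to-text model.
        return f"[transcript of {path.name}]"


class Summarizer:
    def run(self, path: Path) -> str:
        # Placeholder: a real version would call a summarization model.
        return f"[summary of {path.name}]"


# The batch only knows task names; swapping a model means swapping one class.
TASKS: Dict[str, AsyncTask] = {
    "transcribe": Transcriber(),
    "summarize": Summarizer(),
}


def process(task_name: str, path: Path) -> str:
    """Dispatch a queued file to the task registered under `task_name`."""
    return TASKS[task_name].run(path)
```

Adding translation, for instance, would mean registering one more class with a `run` method, without touching the upload service or the scheduler.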

Eco-design perspective:

- Quantify the impact: it would be valuable to measure the carbon emissions generated per minute of transcribed audio. To do this, we will rely on the Software Carbon Intensity (SCI) specification from the Green Software Foundation, which will let us quantify the chosen model's impact precisely. Since our solution is deployed internally, we have access to all the data needed for this calculation.
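The SCI specification defines the score as ((E × I) + M) per R. Here E is the energy consumed (kWh), I the carbon intensity of the electricity (gCO2e/kWh), M the share of the hardware's embodied emissions attributed to the workload, and R the functional unit. A minimal sketch with R set to one minute of transcribed audio follows. The function names are hypothetical, and the inputs would come from measurements on the internal server, so no figures are given here.

```python
def embodied_share(total_embodied_g: float, hours_reserved: float,
                   lifespan_hours: float, resource_share: float = 1.0) -> float:
    """Embodied emissions M attributed to the workload (gCO2e).

    The server's total embodied emissions, scaled by the fraction of its
    expected lifespan reserved for the workload and by the fraction of
    its resources the workload uses.
    """
    return total_embodied_g * (hours_reserved / lifespan_hours) * resource_share


def sci_per_audio_minute(energy_kwh: float, grid_g_per_kwh: float,
                         embodied_g: float, audio_minutes: float) -> float:
    """SCI = ((E * I) + M) / R, with R in minutes of audio (gCO2e per minute)."""
    if audio_minutes <= 0:
        raise ValueError("audio_minutes must be positive")
    operational_g = energy_kwh * grid_g_per_kwh  # E * I
    return (operational_g + embodied_g) / audio_minutes
```

Computing one value per nightly run, from that night's measured energy and the minutes of audio transcribed, would make it possible to compare candidate models on the same server.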

- Meet the essential GR491 criteria: as this is a pilot project, only a few criteria were considered during development. A more comprehensive analysis will be needed if the application evolves further.

Preserving your data privacy and sovereignty

Through the development of this software, we have demonstrated that it is possible to deploy services using AI models on standard servers, without relying on external services, thereby preserving data privacy and sovereignty. Moreover, this can be achieved without incurring additional costs once the system is implemented.

In addition, by examining how technological tools are actually used, we can identify direct impacts linked to their implementation. In our case, by choosing to process transcriptions asynchronously and with a delay, we are able to reuse existing servers that would not have been sufficient if immediate responses were required.
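This trade-off can be checked with simple arithmetic. If the server's real-time factor is its processing time per minute of audio, a sequential night batch only needs the day's total processing time to fit inside the night window. A sketch, with hypothetical function names:

```python
def night_batch_fits(audio_minutes_per_day: float, real_time_factor: float,
                     window_hours: float) -> bool:
    """Sequential processing fits if total processing time <= night window.

    real_time_factor = processing time / audio duration on this server
    (2.0 means one minute of audio takes two minutes to transcribe).
    """
    processing_minutes = audio_minutes_per_day * real_time_factor
    return processing_minutes <= window_hours * 60


def max_real_time_factor(audio_minutes_per_day: float, window_hours: float) -> float:
    """Slowest processing speed the night window can still absorb."""
    return window_hours * 60 / audio_minutes_per_day
```

With hypothetical figures of 120 minutes of audio per day and a real-time factor of 3, the batch needs 360 minutes, which fits in an 8-hour (480-minute) window. An immediate-response service on the same server would instead make each user wait three times the length of their recording, and longer whenever several users submit at once.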

This approach illustrates how thoughtful planning around deployment can lead to more efficient, resource-saving, and environmentally friendly solutions.